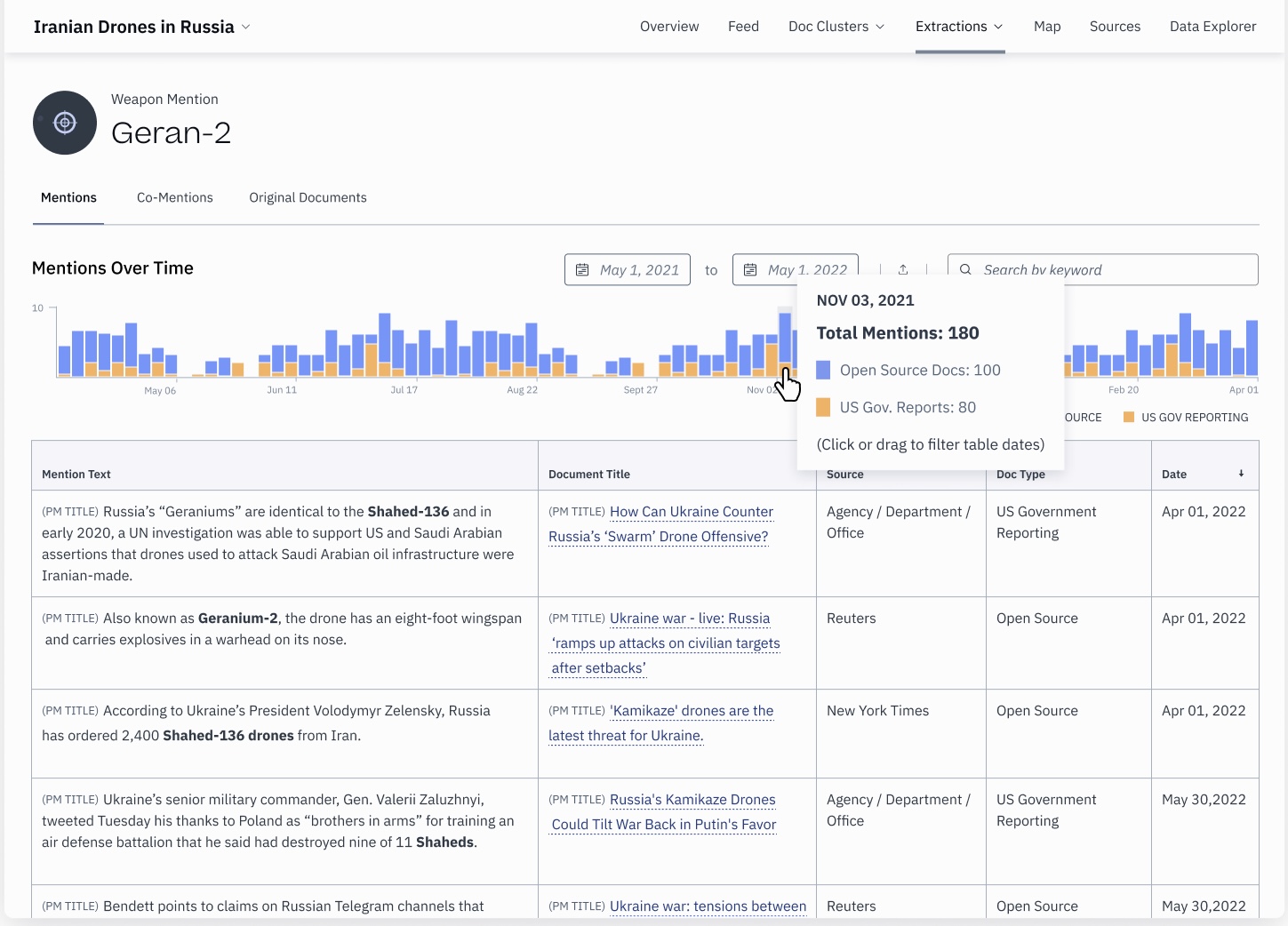

For decision makers in national security seeking a competitive edge, understanding the latest advancements in Natural Language Processing (NLP) is critical. Enter Large Language Models (LLMs), a powerful form of AI that can extract information, translate and summarize text, and answer free-form questions to support critical tasks such as threat detection and strategic analysis. With the help of LLMs, tasks that used to take hours, days, or even weeks can now be accomplished with high fidelity in minutes or seconds. This frees up analysts and operators to focus on their expertise and helps accelerate intelligence and decision-making cycles.

Utilizing LLMs effectively requires a deep understanding of their capabilities and limitations. As experts in building and deploying NLP tools for national security, we can help navigate this complex landscape to ensure successful integration of LLMs into your operations.

Which language model is right for your use case?

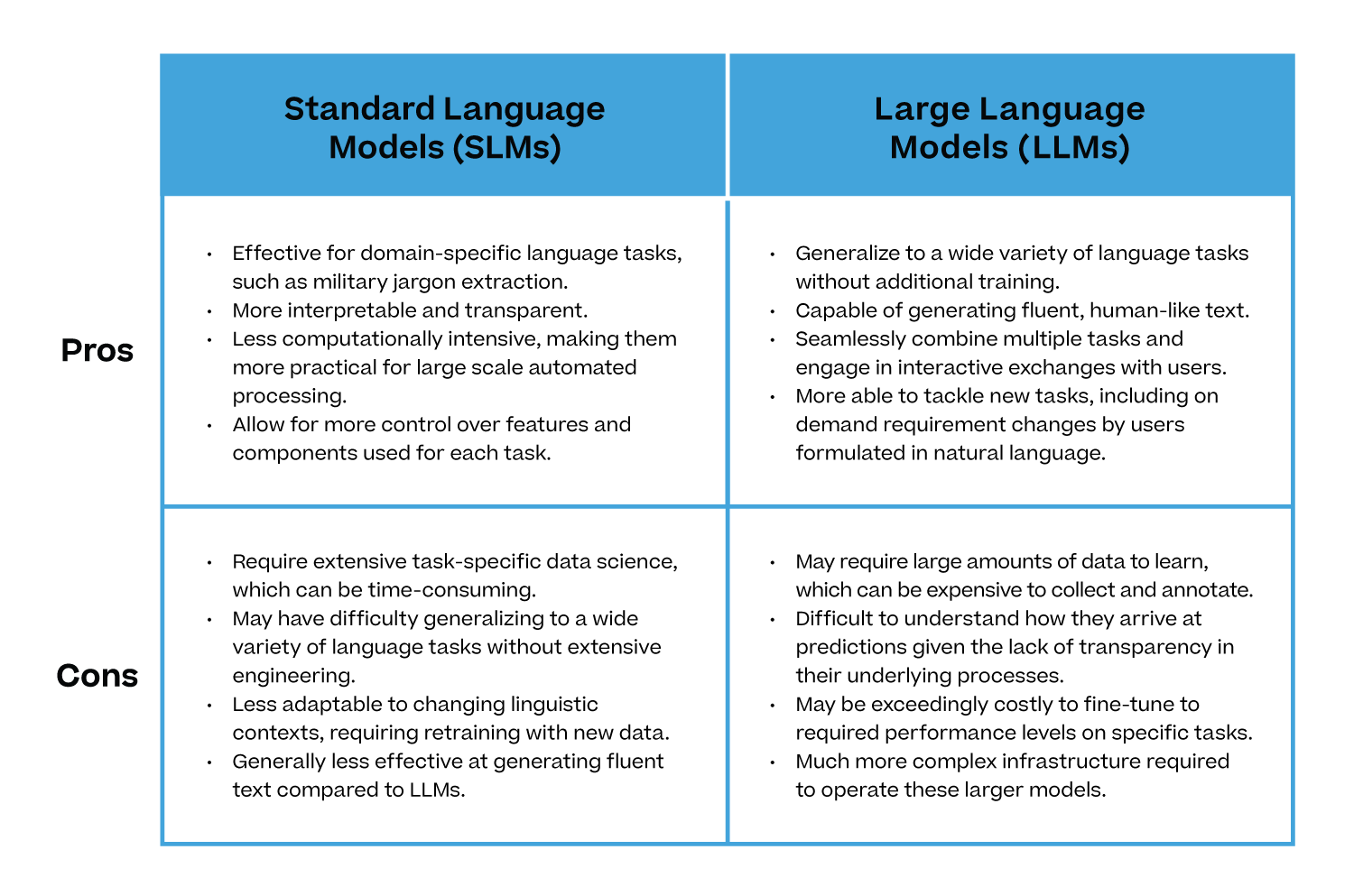

Traditional NLP models, which we refer to here as Standard Language Models (SLMs), range from rule-based and statistical methods to smaller neural network models that analyze and process language data. These models typically require extensive domain knowledge, manual engineering, and/or fine-tuning before they can achieve human-level performance.

In contrast, LLMs are built on deep neural networks that are orders of magnitude larger than those used in SLMs. They learn automatically from large amounts of text data, which saves time and human labor, but they typically cost more to train. LLMs, such as the GPT-3.5-turbo model that powers the ChatGPT application, can generate human-like text, answer questions, and perform a variety of language tasks.

The pros and cons of each are summarized in the table below.

National Security Considerations

When adopting cutting-edge technologies like LLMs, national security organizations need to balance several considerations, including accuracy, cost, speed, and explainability. Unfortunately, there are no hard-and-fast rules for determining the right model for you. The appropriate model depends on the specifics of your use case and will require further scoping.

- Accuracy: LLMs can extract certain information that SLMs cannot, which can provide a critical advantage in some scenarios. However, an LLM that is not appropriately tuned to your use case can produce wrong information, and without a human in the loop that information may impact missions.

- Cost: Running LLMs is costly and may exceed available budgets. In certain cases, it will make more sense to deploy a smaller LLM or use an SLM instead.

- Explainability: It is essential to understand the provenance of certain information to assess confidence in the data. LLMs may not always provide citations or may require additional engineering to incorporate source citations.

Fine-tuning: Models Optimized on Your Data

While a tool like ChatGPT is a great generative AI application, it is not tailored to DoD and IC mission requirements, nor can it be leveraged on your secure network. What is required is an LLM or SLM that is highly performant on your data and deployed in your preferred environment. To achieve this, LLMs and SLMs alike need to be “fine-tuned” on your data.

To do this, you take a model that has already been pre-trained on general data and fine-tune it on your data to improve performance. For example, a model can be fine-tuned to understand IC as ‘Intelligence Community’ instead of ‘Integrated Chips’ or ‘Intensive Care’. Fine-tuning builds on what the model has already learned rather than developing a model from scratch.
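The idea of continuing from an already-trained model, rather than starting over, can be illustrated with a deliberately tiny sketch. This is a toy word-count classifier, not how an LLM is actually fine-tuned (real fine-tuning updates neural network weights). The `SenseModel` class, the example sentences, and the `weight` parameter are all hypothetical and exist only to illustrate the IC example above.

```python
import math
from collections import Counter, defaultdict


class SenseModel:
    """Toy bag-of-words classifier that picks an expansion for an acronym.

    Illustrative only: a stand-in for a pre-trained model, not a real LLM.
    """

    def __init__(self):
        self.sense_counts = Counter()            # examples seen per sense
        self.word_counts = defaultdict(Counter)  # word counts per sense
        self.vocab = set()

    def train(self, examples, weight=1):
        """Update counts in place; a later call continues from prior state."""
        for text, sense in examples:
            self.sense_counts[sense] += weight
            for word in text.lower().split():
                self.word_counts[sense][word] += weight
                self.vocab.add(word)

    def predict(self, text):
        """Return the most likely sense using add-one smoothed log scores."""
        words = text.lower().split()
        total = sum(self.sense_counts.values())
        best, best_score = None, -math.inf
        for sense, count in self.sense_counts.items():
            denom = sum(self.word_counts[sense].values()) + len(self.vocab)
            score = math.log(count / total)
            for word in words:
                score += math.log((self.word_counts[sense][word] + 1) / denom)
            if score > best_score:
                best, best_score = sense, score
        return best


# Hypothetical "general" data: the model has only seen non-mission senses.
general = [
    ("the patient was moved to the ic ward overnight", "Intensive Care"),
    ("nurses in the ic unit monitored the patient", "Intensive Care"),
    ("the ic on the circuit board overheated", "Integrated Chips"),
    ("a new ic design reduces power draw", "Integrated Chips"),
]
# Hypothetical domain data supplied by the customer.
domain = [
    ("the ic assessed the threat report", "Intelligence Community"),
    ("analysts across the ic shared the briefing", "Intelligence Community"),
]

model = SenseModel()
model.train(general)                 # "pre-training" on general text
query = "ic analysts reviewed the threat briefing"
before = model.predict(query)        # cannot know the domain sense yet

model.train(domain, weight=3)        # "fine-tuning": same model, new data
after = model.predict(query)

print("before fine-tuning:", before)
print("after fine-tuning: ", after)
```

The second `train` call reuses everything learned from the general data and only adds the domain examples, which is the property the paragraph above describes. The `weight=3` setting is an arbitrary choice that makes the small domain set count for more than the general examples.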

“The future of LLMs will be open source models trained on customer specific data and securely deployed within the customer’s own cloud infrastructure.”

Sean Gourley, CEO at Primer

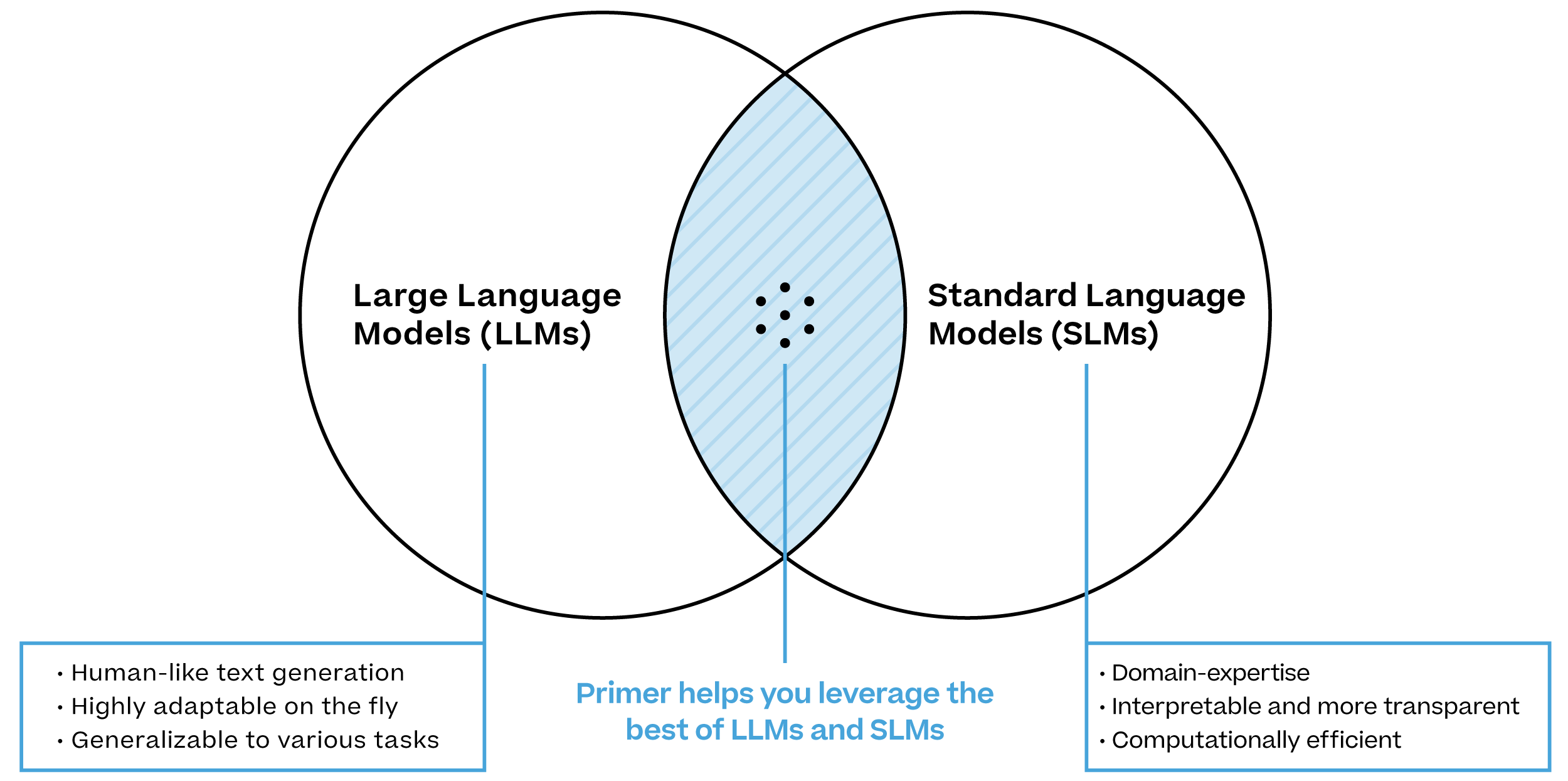

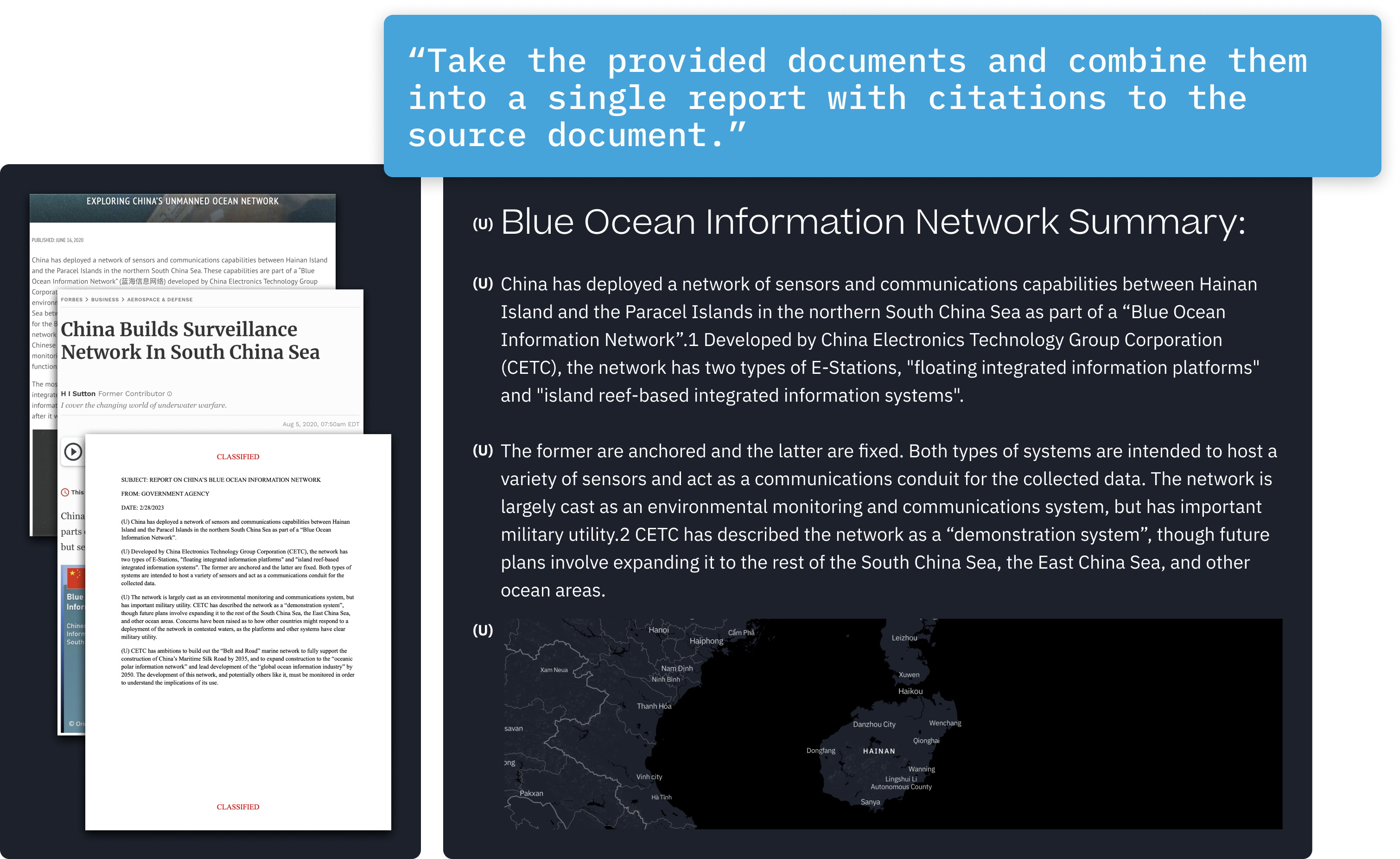

Primer: Improving Workflows with Advanced NLP

Primer’s cutting-edge NLP technology empowers analysts and operators to achieve what was previously infeasible. Our AI platform is designed to unlock previously hard-to-automate parts of your daily workflows, giving you the ability to discover rich insights and make informed decisions at unparalleled speed. With our solutions, you can fine-tune and deploy standard and large language models on your sensitive data in your secure environment. Connect your custom models to our AI-generated knowledge base or your own source of truth for powerful enterprise-wide and access-controlled querying in natural language – no code required.

As a trusted AI partner for national security agencies, Primer is committed to delivering solutions that meet your specific needs and drive real mission impact. Our products are user-friendly for non-technical users, and our technology continually improves through R&D and human feedback loops. Whether you’re looking to enhance your workflows, fine-tune your models, or access your institution’s knowledge and open source information in a whole new way, Primer’s AI-powered solutions are here to help.

At Primer, we build and deploy mission-ready AI applications that meet rapidly evolving defense and security needs. Check out our products or contact us to learn more.