Sometime in 2014, Bastian Obermayer, a reporter working for the Süddeutsche Zeitung newspaper, was sent 11.5 million leaked documents about offshore financial operations, later known as the Panama Papers. It took over a year of analysis by journalists in 80 countries to dig into this immense corpus.

Making sense of the contents of a large set of unknown documents is relevant to many industry applications. In investigative journalism or intelligence operations, quickly identifying the most important documents can make or break an effort. At other times, understanding an emerging or evolving domain can be valuable. For example, imagine mapping new debates on teenage use of digital devices and mental health, or the evolution of topics covered over the decades by popular newspapers.

We developed a prototype pipeline to help address this broad use case as part of our work with Vibrant Data Labs. The pipeline is designed to ingest an arbitrary set of documents, produce a hierarchical visualization of the contents, and finally make the corpus searchable by tagging each document with both specific and broad keywords.

In this post, we’ll present the pipeline’s methodological design. As we’ll see, harnessing deep NLP models, both open-source ones and those available on Primer Engines, opens up new ways of tackling text analysis tasks.

Task overview

The task requires carrying out three main steps.

First, we need to understand what each document is about. Next, we want to look across the corpus to get a big-picture view of what it covers. Importantly, we’ll want to determine which domains are broad and which are more specific. Finally, we want to use this hierarchical lens to tag the documents in a consistent manner, thereby exposing them for search.

Let’s look at each step in turn.

Document representation

Document representation means converting a text document to a vector representing what the document is about. Commonly used approaches include applying a TF-IDF transformation or calculating document embeddings.

Both approaches, however, have severe shortcomings for our purpose. TF-IDF offers interpretable representations but ignores semantic similarity. Document embeddings capture such semantics, but at the price of opaque, and therefore not searchable, document representations.
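To make the TF-IDF trade-off concrete, here is a minimal sketch using only the Python standard library. The toy documents, the whitespace tokenizer, and the smoothed IDF formula are illustrative assumptions rather than part of our pipeline. The weights stay readable because every dimension is a word, but two related documents that share no vocabulary end up with zero similarity.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one {token: weight} dict per document, using a smoothed TF-IDF."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents does each token appear?
    df = Counter(token for tokens in tokenized for token in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            token: count * (math.log((1 + n_docs) / (1 + df[token])) + 1.0)
            for token, count in tf.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * b.get(token, 0.0) for token, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Illustrative documents (assumed, not taken from a real corpus).
docs = [
    "dishwasher repair guide",
    "fixing a broken washing machine",
    "dishwasher installation guide",
]
vectors = tfidf_vectors(docs)

# Related topics, but no shared words: TF-IDF sees nothing in common.
sim_related_no_overlap = cosine(vectors[0], vectors[1])
# Shared words: TF-IDF reports a positive similarity.
sim_shared_words = cosine(vectors[0], vectors[2])
```

A document embedding model would typically place the first two documents close together, but its dimensions could not be read as search terms the way the keys of these dictionaries can.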

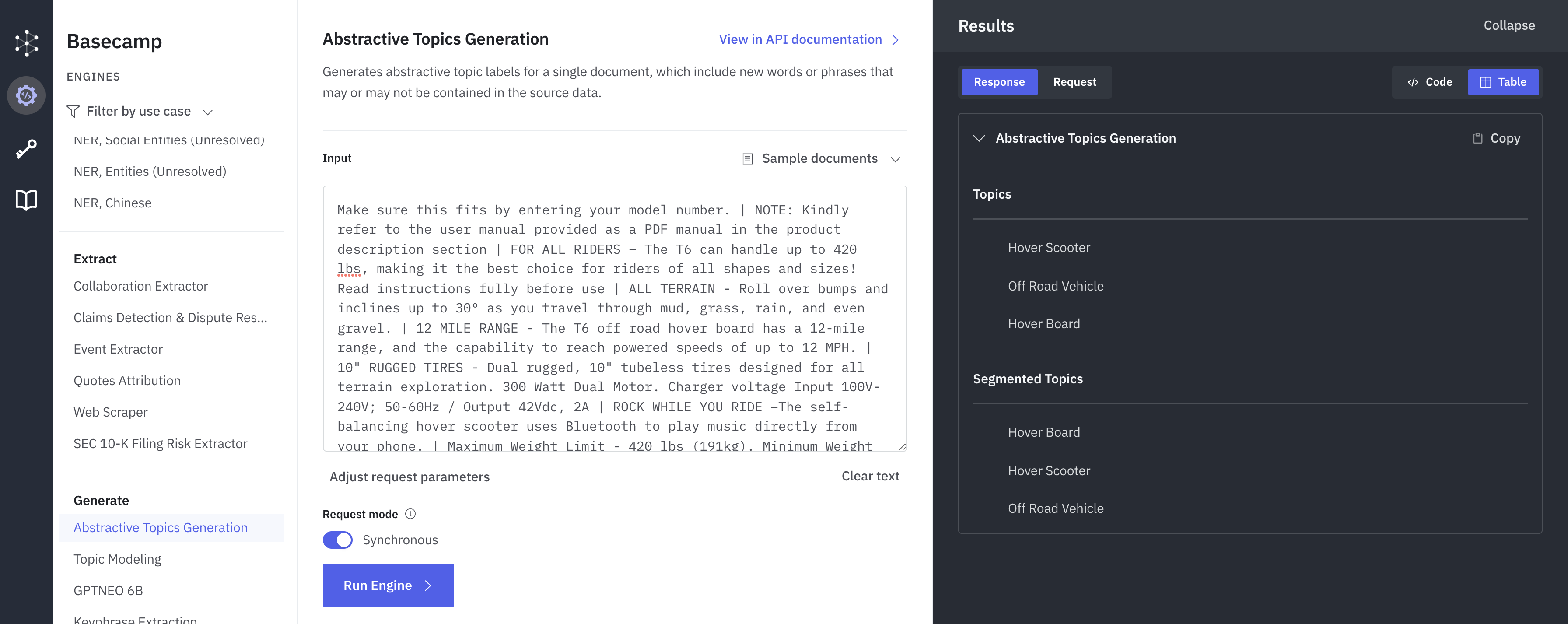

Our solution uses Primer’s Abstractive Topic Generation Engine. Its power comes from pairing the underlying deep language model’s grasp of semantics and context with plain-language outputs.

With this Engine, we are able to dramatically cut through the complexity of free text and reduce documents to a handful of selected, highly relevant, and intelligible topic labels.

Get a high-level view across documents

The next challenge is going from the individual document representations to a zoomed-out view of the corpus content.

Traditionally, one could tackle this by clustering similar documents together or using topic modelling techniques like Latent Dirichlet Allocation.

We take a different approach. Instead of grouping the documents, we work on the extracted topic terms and learn the relations in that set. To do so, we carry out two simple steps using off-the-shelf tools:

- To measure semantic distance between terms, we project these into a vector embedding space using SentenceBERT, an open-source sentence embedding model.

- We use agglomerative clustering – a bottom-up hierarchical clustering method – to extract a tree data structure connecting related terms into ever broader groups sharing semantic proximity.
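The two steps above can be sketched in a few lines of plain Python. The three-dimensional vectors below are hypothetical stand-ins for real sentence embeddings, and the choice of cosine distance with average linkage is our assumption for illustration; in practice, one would likely obtain the embeddings from a sentence embedding model and use a library implementation of agglomerative clustering such as the one in scikit-learn.

```python
import math
from itertools import combinations

# Hypothetical 3-d vectors standing in for sentence embeddings of each term.
TOY_EMBEDDINGS = {
    "washing machine": [0.9, 0.1, 0.0],
    "dishwasher": [0.8, 0.2, 0.1],
    "oven": [0.1, 0.9, 0.1],
    "microwave": [0.2, 0.8, 0.0],
}


def cosine_distance(u, v):
    """1 - cosine similarity, so that similar terms are close together."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def average_linkage(cluster_a, cluster_b, embeddings):
    """Mean pairwise distance between the terms of two clusters."""
    distances = [
        cosine_distance(embeddings[a], embeddings[b])
        for a in cluster_a
        for b in cluster_b
    ]
    return sum(distances) / len(distances)


def agglomerate(embeddings):
    """Repeatedly merge the two closest clusters; return the merges in order."""
    clusters = [(term,) for term in embeddings]
    merges = []
    while len(clusters) > 1:
        a, b = min(
            combinations(clusters, 2),
            key=lambda pair: average_linkage(pair[0], pair[1], embeddings),
        )
        clusters = [c for c in clusters if c not in (a, b)]
        clusters.append(a + b)
        merges.append((a, b))
    return merges


merges = agglomerate(TOY_EMBEDDINGS)
for left, right in merges:
    print(left, "+", right)
```

Read bottom-up, the merge list is the tree: the earliest merges join the most closely related terms, and each later merge covers a wider semantic radius.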

This is how the pipeline can learn that ‘washing machine’ and ‘dishwasher’ are related, as are ‘oven’ and ‘microwave’, and as we look across a wider semantic radius, these will eventually fall in a common group.

Understanding the hierarchy across domains is key to making the corpus searchable, as user queries will range from specific to broad.

One crucial step is still missing though: how do we label this broader group? The richness of deep language models comes to our aid here. We’ve found that simply selecting the term that is most similar – based on embedding similarity – to the other terms in the group yields a pretty good representative item.
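As a rough sketch of this selection rule, the snippet below scores each term by the sum of its cosine similarities to the other terms in the group and ranks the terms by that score. The two-dimensional vectors are made up for illustration, and excluding a term’s similarity to itself is an assumption; including it would add the same constant to every score and leave the ranking unchanged.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two dense vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def rank_by_centrality(embeddings):
    """Rank terms by summed cosine similarity to the other terms in the group."""
    scores = {
        term: sum(
            cosine_similarity(vector, other_vector)
            for other, other_vector in embeddings.items()
            if other != term
        )
        for term, vector in embeddings.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical 2-d embeddings: 'saucepan' sits between 'pan' and 'pot'.
group = {
    "pan": [1.0, 0.2],
    "pot": [0.2, 0.9],
    "saucepan": [0.7, 0.7],
}

ranking = rank_by_centrality(group)
representative = ranking[0][0]  # the term used to label the whole group
```

With these toy vectors, the term lying between the other two collects the highest score and becomes the group label.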

Let’s look at the concrete example below. Strikingly, the term ‘saucepan’, which combines elements of both pots and pans, indeed emerges as the most central term. On the other hand, ‘wok’ and ‘teflon pan’, which can be thought of as specific types of pans, are at the bottom of the ranking as representative terms.

| Rank | Term | Centrality Score |
| --- | --- | --- |
| 0 | saucepan | 3.180589 |
| 1 | pan | 3.028817 |
| 2 | pot | 2.984785 |
| 3 | wok | 2.769623 |
| 4 | teflon pan | 2.728998 |

Moreover, the selected representative terms become more conceptually broad and abstract as we seek to label more diverse groups of terms. We can see this behavior in the examples below, wherein the more abstract term is chosen as the most representative when set alongside two related but semantically distinct terms.

| Rank | Term | Centrality Score |
| --- | --- | --- |
| 0 | kitchen | 1.787934 |
| 1 | microwave | 1.749099 |
| 2 | pan | 1.659810 |

| Rank | Term | Centrality Score |
| --- | --- | --- |
| 0 | dining | 1.893527 |
| 1 | plates | 1.890686 |
| 2 | table cloth | 1.887701 |

By virtue of this feature, these two simple steps allow building a structured view over topic space in the corpus, offering both narrow and broad perspectives.

Search documents using hierarchical relationships

Finally, we can proceed to tag the original documents and power the search functionality. Starting from the original document topic labels, we use the relationships in the term tree to ensure each document is also linked to the corresponding, more abstract, domains. For example, depending on the extracted hierarchy, a document labeled ‘microwave’ could also be tagged with ‘cooking’, ‘household items’, and ‘consumer durables’, ideally with a declining relatedness score as we move further up these abstractions. Notably, this means that microwave products would now be picked up in searches both for microwaves specifically and for household appliances in general.
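The tagging step can be illustrated with a small sketch. The child-to-parent links, the document IDs, and the halving of the relatedness score at each level are all hypothetical choices made for this example; the actual hierarchy comes from the term tree, and the scoring scheme could be defined in other ways.

```python
# Hypothetical child -> parent links, as might be read off the term tree.
PARENT = {
    "microwave": "cooking",
    "oven": "cooking",
    "cooking": "household items",
    "dishwasher": "household items",
    "household items": "consumer durables",
}

DECAY = 0.5  # assumed: relatedness halves with every step up the hierarchy


def expand_tags(labels, parent=PARENT, decay=DECAY):
    """Map each tag to a score: 1.0 for original labels, decaying for ancestors."""
    tags = {}
    for label in labels:
        term, score = label, 1.0
        while term is not None:
            # Keep the best score if a tag is reachable from several labels.
            tags[term] = max(tags.get(term, 0.0), score)
            term, score = parent.get(term), score * decay
    return tags


def search(query, tagged_docs):
    """Return (doc_id, score) pairs for documents tagged with the query, best first."""
    hits = [
        (doc_id, tags[query])
        for doc_id, tags in tagged_docs.items()
        if query in tags
    ]
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Illustrative documents with the topic labels extracted for each.
doc_labels = {
    "doc-1": ["microwave"],
    "doc-2": ["dishwasher"],
}
tagged_docs = {doc_id: expand_tags(labels) for doc_id, labels in doc_labels.items()}

specific_hits = search("microwave", tagged_docs)
broad_hits = search("household items", tagged_docs)
```

Here a search for ‘microwave’ returns only the first document, while a search for ‘household items’ returns both, with the dishwasher document ranked higher because it sits one level closer to that tag.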

Augmenting insights into your data with deep NLP

We’ve tried this tool on different types of datasets and found it can provide valuable initial insights out of the box. We’ve played around with book blurbs, news documents, academic papers on COVID-19, and documents made public by the CIA. In all cases, we were able, at a minimum, to get an immediate understanding of what the documents were about and to have a way of searching for the documents we were most interested in.

Clustering documents and extracting topics are not new tasks in unsupervised learning. However, the above workflow differs from these traditional approaches by drawing on the vast additional insight offered by deep language models, such as the Abstractive Topic Generation Engine and SentenceBERT. Without language models, one would be limited to making sense of documents based only on the distribution of features in the local corpus. Instead, modern NLP can interpret documents, even in small datasets, using the understanding gained from the vast training corpus that is embedded in the language model itself.

This is the design choice of the Primer Engines, where powerful NLP models are exposed via an API to support the creation of composable NLP pipelines on customer documents.

Further reading

SentenceTransformers Documentation

Agglomerative Clustering example, Wikipedia

Agglomerative Clustering, Scikit-learn User Guide

AgglomerativeClustering implementation, Scikit-learn Documentation

Original paper introducing Hierarchical Latent Dirichlet Allocation

We create the tools behind the decisions that change the world. ©2022 Primer