Is there really one NLP language model to rule them all?

It has become standard practice in the Natural Language Processing (NLP) community: release a well-optimized English-corpus model, then procedurally apply it to dozens (or even hundreds) of additional languages. These secondary language models are usually trained in a fully unsupervised manner. They’re published on arXiv a few months after the initial English version, and it all makes a big splash in the tech press.

In August of 2016, for example, Facebook released fastText (1), a speedy tool for word-vector embedding calculations. Over the next nine months, Facebook then released nearly 300 auto-generated fastText models for all the languages available on Wikipedia (2). Similarly, Google debuted its syntactic parser, Parsey McParseface (3), in May of 2016, only to release an updated version of the parser trained on 40 different languages later that August (4).

You might wonder whether multilingual NLP is thus a solved problem. But can English-trained models be naively extended to non-English languages, or is some native-level understanding of a language required before a model is updated? The answer is particularly pertinent to us here at Primer, given our customers’ focus on understanding and generating text across a range of multilingual corpora.

Let’s begin exploring the problem by considering one simple NLP model type: word2vec-style geometric embedding (5). Word vectors are useful for training a variety of text classifier types, especially when properly labeled training data is scarce (6). We could, for instance, use Google’s canonical news-trained vector model (7) to train a classifier for English sentiment analysis. We would then extend that sentiment classifier to incorporate other languages, such as Chinese, Russian, or Arabic. That would require an additional set of foreign vectors, compiled either in an automated manner or through manual tweaking by a native speaker of that language. For example, if we were interested in Russian word embeddings, we could choose either Facebook’s automated fastText computations or the Russian-specific RusVectores results (8), which have been calculated, tested, and maintained by two Russian-speaking graduate students. How would those two sets of vectors compare? Let’s find out.

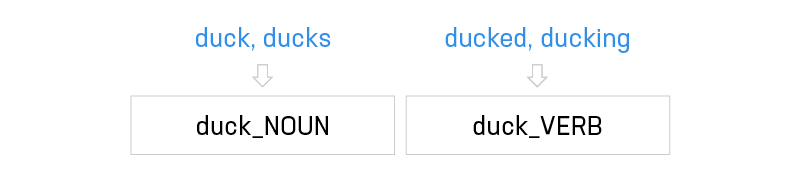

RusVectores offers a multitude of vector models to choose from. For our analysis, let’s select their Russian news model (9), which has been trained across a corpus of nearly 5 billion words from over three years’ worth of Russian news articles. Despite the massive corpus size, the vector file itself is only 130 MB, which is one-tenth the size of Google’s canonical news-trained word2vec model (7). Partially, the discrepancy in size is due to the reduction of all Russian words to their lemmatized equivalents, with a part-of-speech tag appended by an underscore. This strategy is similar to the recently published Sense2Vec (10) technique in which the varied usages of a word, such as “duck”, “ducks”, “ducked” and “ducking”, get replaced by a single lemma/part-of-speech combination, such as “duck_NOUN” or “duck_VERB”.
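The lemma/part-of-speech collapsing described above can be sketched in a few lines of Python. This is only an illustration: the lookup table `TOY_ANALYSES` and the helper `to_lemma_pos` are hypothetical names, and a real pipeline would obtain lemmas and tags from a morphological analyzer that looks at sentence context rather than from a fixed dictionary.

```python
# Toy lookup table: surface form -> (lemma, part-of-speech tag).
# Hypothetical data for illustration; "duck" or "ducks" could just as well be
# verbs, which is why a real tagger decides from the surrounding sentence.
TOY_ANALYSES = {
    "duck": ("duck", "NOUN"),
    "ducks": ("duck", "NOUN"),
    "ducked": ("duck", "VERB"),
    "ducking": ("duck", "VERB"),
}


def to_lemma_pos(token):
    """Return 'lemma_POS' for known tokens; leave unknown tokens unchanged."""
    analysis = TOY_ANALYSES.get(token.lower())
    if analysis is None:
        return token
    lemma, pos = analysis
    return f"{lemma}_{pos}"


tokens = ["duck", "ducks", "ducked", "ducking"]
vocabulary_keys = [to_lemma_pos(t) for t in tokens]
print(vocabulary_keys)
```

Four surface forms shrink to two vocabulary keys, which is the same effect that keeps the lemmatized vector file small.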

Simplifying the vocabulary through lemmatization is more than just a trick to reduce dataset size. The lemmatization step is actually critical for the performance of the embedded vector in Russian (11). In order to understand why lemmatization is required, one need only look at the unusual role that suffixes play in Russian grammar.

Russian, like most languages, disambiguates the usage of certain words by changing their endings based on grammatical context. This process is known as inflection, and in English we use it to signal the proper tense of verbs.

The inflection is how we know that Natasha’s purchase of vodka occurred in the past rather than the present or future. English nouns can also undergo inflection, but only to mark plurality (“one vodka” vs “many vodkas”). In Russian, however, noun inflection is significantly more prevalent. Russian word endings help convey critical noun-related information, such as which nouns are the subjects and which are the objects within sentences. In English, such grammatical context is expressed through word order alone.

The meanings of these two sentences are quite different, even though the words in the English sentences are identical. The Russian sentences, on the other hand, rely on inflection rather than word order to communicate the noun relationships. Russian sentence A is the direct translation of English sentence A, and a simple suffix swap generates the nonsensical sentence B. The relation between Natasha (Наташа) and the vodka (водка) is signaled by her suffix, not her position in the sentence.

The Russian dependence on suffixes leads to a higher total count of possible Russian words relative to English. For instance, let us consider the following set of phrases: “I like vodka”, “Give me vodka”, “Drown your sorrows with vodka”, “No more vodka left!”, and “National vodka company”. In English, the word vodka remains unchanged. But their Russian equivalents have multiple versions of the word vodka:

Our use of vodka changes based on context, and instead of a single English vodka to absorb we now have four Russian vodkas that we must deal with! This suffix-based redundancy adds noise to our vector calculations. Vector quality will suffer unless we lemmatize.
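To make the redundancy concrete, here is a small sketch that counts vocabulary entries before and after lemmatization. The four case forms below are genuine Russian inflections of водка, but they are chosen for illustration and are not necessarily the exact forms used in the phrases above. The token stream `corpus_tokens` and the mapping `FORM_TO_LEMMA` are hypothetical.

```python
from collections import Counter

# Real case forms of "водка" (vodka), mapped by hand to their shared lemma.
FORM_TO_LEMMA = {
    "водка": "водка",   # nominative
    "водки": "водка",   # genitive
    "водку": "водка",   # accusative
    "водкой": "водка",  # instrumental
}

# Hypothetical token stream, as the forms might appear in a raw corpus.
corpus_tokens = ["водка", "водку", "водкой", "водки", "водку", "водка"]

# Without lemmatization, every surface form becomes its own vocabulary entry.
raw_counts = Counter(corpus_tokens)

# With lemmatization, all occurrences are pooled under a single entry.
lemma_counts = Counter(FORM_TO_LEMMA.get(t, t) for t in corpus_tokens)

print(len(raw_counts), "distinct raw forms")
print(len(lemma_counts), "distinct lemmas")
print(lemma_counts["водка"], "occurrences pooled under one lemma")
```

In the raw vocabulary, each form would get its own vector trained on only a fraction of the word's contexts. After lemmatization, one vector sees all of them.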

With this in mind, let’s carry out the following experiment: we’ll load the RusVectores model using the Python Gensim library (12) (13) and execute the similar_by_word function (14) on “водка_NOUN” (vodka_NOUN) to get the top ten words that are closest, in Russian vector space, to vodka.

We execute the experiment using the following set of simple Python commands:

    from gensim.models import KeyedVectors

    # Load the pretrained RusVectores news model (binary word2vec format).
    word_vectors = KeyedVectors.load_word2vec_format('rus_vector_model.bin', binary=True)

    # Print the ten nearest neighbors of the lemma "водка" tagged as a noun.
    for word, similarity in word_vectors.most_similar('водка_NOUN', topn=10):
        print(word)

The results (and their translations) read as follows:

This output is sensible. Most of the nearest words pertain to forms of alcohol, at least. Still, as a sanity check, let us compare the Russian output to the ten words nearest to vodka in Google’s news-trained vector model (7). That ordered output is as follows:

The five highlighted alcoholic beverages also appear within the Russian results. Thus, we have achieved consistency across the two vector models. A toast to that!
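For reference, the “nearest” words in both queries are ranked by cosine similarity between vectors. The following is a minimal pure-Python sketch of that ranking. The three-dimensional vectors in `toy_vectors` are made up for illustration and come from neither model, and the helpers `cosine_similarity` and `nearest` are hypothetical stand-ins for what Gensim does far more efficiently.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, vectors, topn=3):
    """Rank every other word by cosine similarity to the query word."""
    scored = [
        (word, cosine_similarity(vectors[query], vec))
        for word, vec in vectors.items()
        if word != query
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:topn]


# Made-up toy vectors: drinks point one way, the laptop another.
toy_vectors = {
    "vodka": [0.9, 0.1, 0.0],
    "whiskey": [0.8, 0.2, 0.1],
    "beer": [0.7, 0.3, 0.0],
    "juice": [0.2, 0.9, 0.1],
    "laptop": [0.0, 0.1, 0.9],
}

for word, score in nearest("vodka", toy_vectors, topn=3):
    print(word, round(score, 3))
```

Because cosine similarity ignores vector length, two words count as close when their vectors point in similar directions, regardless of how frequent either word is.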

But now let’s repeat the experiment using Facebook’s Russian fastText vectors. The first thing we observe, even prior to loading the model, is that Facebook’s vector file (15) is 3.5 GB in size, more than twice as big as Google’s. The difference in file size makes itself known when we load the model into Gensim: the Facebook Russian vectors take over two minutes to load on a state-of-the-art laptop, while the RusVectores model takes less than 20 seconds.

Why is the Facebook model so incredibly large? The answer becomes obvious as we query the ten nearest words to vodka. In the Russian fastText model they are as follows:

Eight of them represent a morphological variation of the vodka poured into fastText. Additionally, certain common Russian-specific Unicode characters (such as the » arrow designating the end of a Russian quotation) have erroneously been added to crawled words. The redundancy of Facebook’s Russian vocabulary thus increases the size of the vector set.

To carry out a fair comparison with the RusVectores model, we need to lemmatize the fastText results and filter out the redundant ones. The top ten non-repeating nearest words in the Russian fastText model are:

Shockingly, half of these results correspond to non-alcoholic drinks. How disappointing!
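The filtering step used for that comparison can be sketched roughly as follows. It is an illustration under stated assumptions, not the exact procedure used here: `dedupe_by_lemma`, `toy_lemmatize`, and the `TOY_LEMMAS` table are hypothetical, the similarity scores are invented, and a real run would plug in a proper Russian lemmatizer.

```python
def dedupe_by_lemma(neighbors, lemmatize, query_lemma, topn=10):
    """Keep the best-ranked neighbor per lemma, skipping forms of the query."""
    seen = {query_lemma}
    kept = []
    for word, similarity in neighbors:  # assumed sorted by descending similarity
        # Strip stray quotation marks and punctuation picked up during crawling.
        cleaned = word.strip("«»\"'.,!?").lower()
        lemma = lemmatize(cleaned)
        if lemma in seen:
            continue
        seen.add(lemma)
        kept.append((lemma, similarity))
        if len(kept) == topn:
            break
    return kept


# Hypothetical hand-written lemma table standing in for a real lemmatizer.
TOY_LEMMAS = {"водки": "водка", "водку": "водка", "водкой": "водка", "пива": "пиво"}


def toy_lemmatize(word):
    return TOY_LEMMAS.get(word, word)


# Invented neighbor list: inflected forms, a quote-polluted token, and two drinks.
raw_neighbors = [
    ("водки", 0.80), ("водку", 0.78), ("водка»", 0.75),
    ("пиво", 0.70), ("пива", 0.68), ("коньяк", 0.65),
]

filtered = dedupe_by_lemma(raw_neighbors, toy_lemmatize, query_lemma="водка", topn=3)
print(filtered)
```

In practice one would request far more than ten raw neighbors from the model, since most of the top hits are discarded as inflections of the query word.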

It seems that Facebook’s one-size-fits-all approach to model training delivers mediocre results on Russian text. But hey, it provided a decent starting place. Certain auto-trained word vector models generate outputs that border on the absurd. Take, for example, Polyglot (16), which offers models trained on Wiki-dumps from 40 different languages and produces these outputs for vodka’s nearest neighbors:

Some language models should not be blindly trained on input data without first taking all the nuances of the language into account. So use and train these models in moderation, rather than downing 40 languages all in one go. Such model abuse will lead to a headache for you and all your international customers. Instead, please take things slowly in order to appreciate the beautiful distinctions of each language, like one appreciates a fine and savory wine.

References

1. https://research.fb.com/fasttext/
2. https://github.com/facebookresearch/fastText/blob/master/pretrained-vectors.md
3. https://research.googleblog.com/2016/05/announcing-syntaxnet-worlds-most.html
4. https://research.googleblog.com/2016/08/meet-parseys-cousins-syntax-for-40.html
5. https://blog.acolyer.org/2016/04/21/the-amazing-power-of-word-vectors/
6. http://nadbordrozd.github.io/blog/2016/05/20/text-classification-with-word2vec/
7. http://mccormickml.com/2016/04/12/googles-pretrained-word2vec-model-in-python/
8. http://rusvectores.org/en/models/
9. http://rusvectores.org/static/models/news0300_2.bin.gz
10. https://explosion.ai/blog/sense2vec-with-spacy
11. http://www.dialog-21.ru/media/1119/arefyevnvetal.pdf
12. https://radimrehurek.com/gensim/
13. https://radimrehurek.com/gensim/models/keyedvectors.html
14. https://radimrehurek.com/gensim/models/word2vec.html#gensim.models.word2vec.Word2Vec.similar_by_word
15. https://s3-us-west-1.amazonaws.com/fasttext-vectors/wiki.ru.zip
16. https://pypi.python.org/pypi/polyglot